publications

† denotes equal contribution

see my google scholar profile for latest publications

- working paper

under review

Multi-stream STGAN: A Spatiotemporal Image Fusion Model with Improved Temporal Transferability

Fangzheng Lyu†, Zijun Yang†, Chunyuan Diao, and Shaowen Wang

working paper, under review

Spatiotemporal satellite image fusion aims to generate remote sensing images satisfying both high spatial and temporal resolution by integrating different satellite imagery datasets with distinct spatial and temporal resolutions. Such fusion technique is crucial for numerous applications that require frequent monitoring at fine spatial and temporal scales spanning agriculture, environment, natural resources and disaster management. However, existing fusion models have difficulty accommodating abrupt spatial changes in land cover among images and dealing with temporally distant image data. This study proposes a novel multi-stream spatiotemporal fusion generative adversarial network (STGAN) model for spatiotemporal satellite image fusion that can produce accurate fused images and accommodate substantial temporal differences between the input images.

- journal article

2024

EMET: An emergence-based thermal phenological framework for near real-time crop type mapping

Zijun Yang, Chunyuan Diao, Feng Gao, and Bo Li

ISPRS Journal of Photogrammetry and Remote Sensing, 2024

Near real-time (NRT) crop type mapping plays a crucial role in modeling crop development, managing food supply chains, and supporting sustainable agriculture. Yet NRT crop type mapping is challenging due to the obstacle in acquiring timely crop type reference labels during the current season for crop mapping model building. Meanwhile, the crop mapping models constructed with historical crop type labels and corresponding satellite imagery may not be applicable to the current season in NRT due to spatiotemporal variability of crop phenology. The difficulty in characterizing crop phenology in NRT remains a significant hurdle in NRT crop type mapping. To tackle these issues, a novel emergence-based thermal phenological framework (EMET) is proposed in this study for field-level NRT crop type mapping. The EMET framework comprises three key components: hybrid deep learning spatiotemporal image fusion, NRT thermal-based crop phenology normalization, and NRT crop type characterization. The EMET framework paves the way for large-scale satellite-based NRT crop type mapping at the field level, which can largely help reduce food market volatility to enhance food security, as well as benefit a variety of agricultural applications to optimize crop management towards more sustainable agricultural production.

- journal article

2023

CropSow: An integrative remotely sensed crop modeling framework for field-level crop planting date estimation

Yin Liu, Chunyuan Diao, and Zijun Yang

ISPRS Journal of Photogrammetry and Remote Sensing, 2023

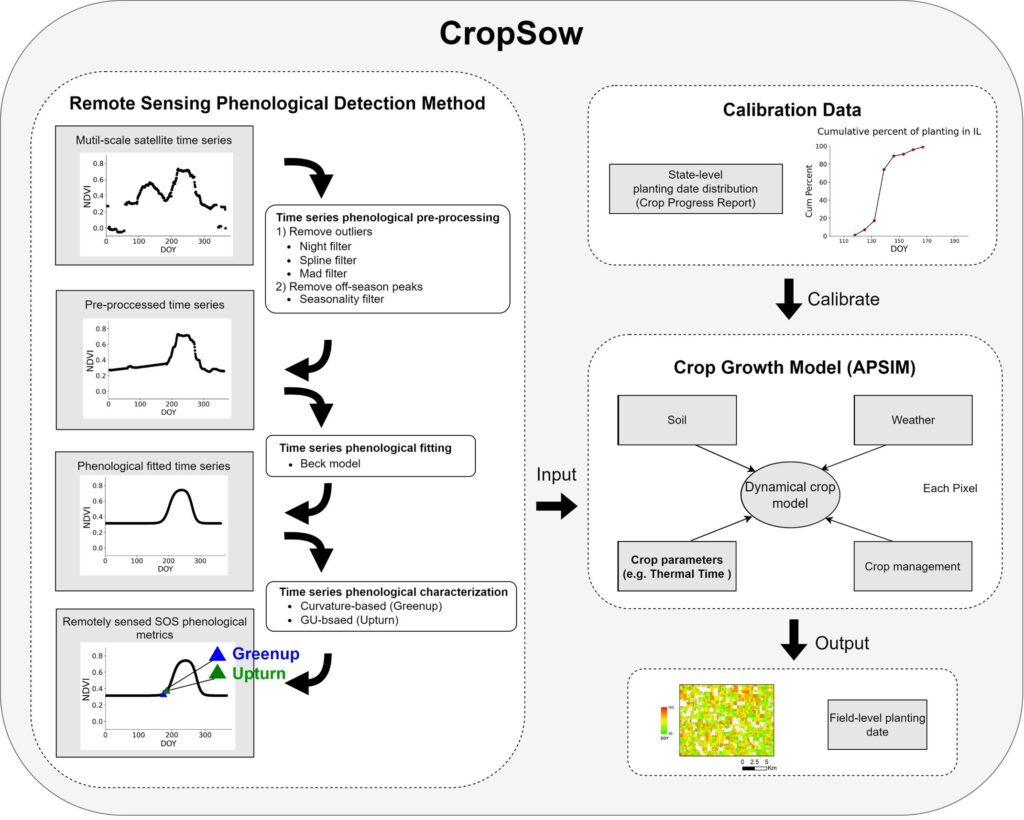

Crop planting timing is critical in regulating environmental conditions of crop growth throughout the season, and is an essential parameter in crop simulation models for estimating dry matter accumulation and yields. Accurate planting date information is key to characterizing crop growing dynamics under varying farming practices and facilitating agricultural adaptation to climate change. To date, the main methods to acquire planting dates include field survey methods, weather-dependent methods, and remote sensing phenological detecting methods. However, it is still challenging to effectively estimate the crop planting dates at field levels due to the lack of appropriate field-level modeling design as well as the dearth of ground planting reference data. In our study, we develop a novel CropSow modeling framework to estimate field-level planting dates by integrating the remote sensing phenological detecting method with the crop growth model. The remote sensing phenological detecting method is devised to retrieve the critical crop phenological metrics of farm fields from remote sensing time series, which are then integrated into the crop growth model for field planting date estimation in consideration of soil-crop-atmosphere continuum. CropSow leverages the rich physiological knowledge embedded in the crop growth model to scalably interpret satellite observations under a variety of environmental and management conditions for field-level planting date retrievals.
With corn in Illinois, US as a case study, the developed CropSow outperforms three advanced benchmark models (i.e., the remote sensing accumulative growing degree day method, the weather-dependent method, and the shape model) in crop planting date estimation at the field level, with R square higher than 0.68, root mean square error (RMSE) lower than 10 days, and mean bias error (MBE) around 5 days from 2016 to 2020. It achieves better generalization performance than the benchmark models, as well as stronger adaptability to abnormal weather conditions with more robust performance in estimating the planting dates of farm fields. CropSow holds considerable promise to extrapolate over space and time for estimating the timing of crop planting of individual farm fields at large scales.

@article{RN364, author = {Liu, Yin and Diao, Chunyuan and Yang, Zijun}, title = {CropSow: An integrative remotely sensed crop modeling framework for field-level crop planting date estimation}, journal = {ISPRS Journal of Photogrammetry and Remote Sensing}, volume = {202}, pages = {334-355}, issn = {0924-2716}, year = {2023}, }

- journal article

2023

Towards Scalable Within-Season Crop Mapping with Phenology Normalization and Deep Learning

Zijun Yang, Chunyuan Diao, and Feng Gao

IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2023

Crop-type mapping using time-series remote sensing data is crucial for a wide range of agricultural applications. Crop mapping during the growing season is particularly critical in timely monitoring of the agricultural system. Most existing studies focusing on within-season crop mapping leverage historical remote sensing and crop type reference data for model building, due to the difficulty in obtaining timely crop type samples for the current growing season. Yet the crop type samples from previous years may not be used directly considering the diverse patterns of crop phenology across years and locations, which hampers the scalability and transferability of the model to the current season for timely crop mapping. This article proposes an innovative within-season emergence (WISE) phenology normalized deep learning model towards scalable within-season crop mapping. The crop time-series remote sensing data are first normalized by the WISE crop emergence dates before being fed into an attention-based one-dimensional convolutional neural network classifier. Compared to conventional calendar-based approaches, the WISE-phenology normalization approach substantially helps the deep learning crop mapping model accommodate the spatiotemporal variations in crop phenological dynamics. Results in Illinois from 2017 to 2020 indicate that the proposed model outperforms calendar-based approaches and yields over 90% overall accuracy for classifying corn and soybeans at the end of season. During the growing season, the proposed model can give satisfactory performance (85% overall accuracy) one to four weeks earlier than calendar-based approaches.
With WISE-phenology normalization, the proposed model exhibits more stable performance across Illinois and can be transferred to different years with enhanced scalability and robustness.

@article{RN296, author = {Yang, Zijun and Diao, Chunyuan and Gao, Feng}, title = {Towards Scalable Within-Season Crop Mapping with Phenology Normalization and Deep Learning}, journal = {IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing}, issn = {1939-1404}, year = {2023}, }

- journal article

2023

Monitoring spring leaf phenology of individual trees in a temperate forest fragment with multi-scale satellite time series

Yilun Zhao, Chunyuan Diao, Carol K Augspurger, and Zijun Yang

Remote Sensing of Environment, 2023

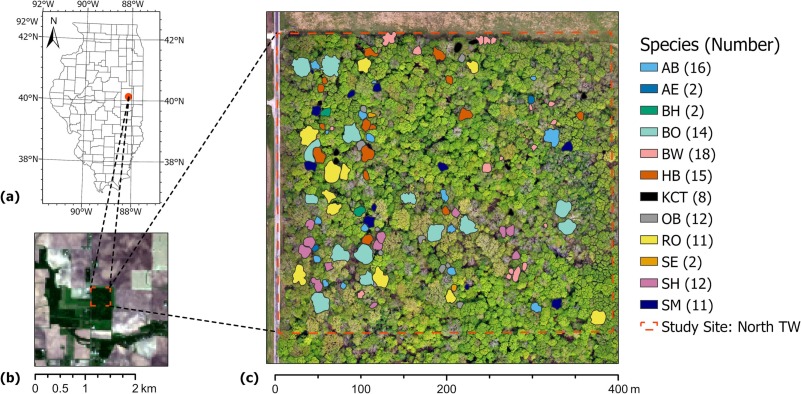

Forest fragmentation has been increasingly exacerbated by deforestation, urbanization, and agricultural expansion. Monitoring the forest fragments via the lens of tree-crown scale leaf phenology is critical to understand tree species phenological responses to climate change and identify the fragment species vulnerable to environmental disturbance. Despite advances in remote sensing for phenology monitoring, detecting tree-crown scale leaf phenology in fragmented forests remains challenging. Simultaneous tracking of key spring phenological events that are crucial to ecosystem functions and climate change responses is also neglected. To address these challenges, we develop a novel tree-crown scale remote sensing phenological monitoring framework to characterize all the critical spring phenological events of individual trees of deciduous forest fragments, with Trelease Woods in Champaign, Illinois as a case study. The novel framework comprises four components: 1) generate high spatiotemporal resolution fusion imagery from multi-scale satellite time series with a hybrid deep learning fusion model; 2) calibrate PlanetScope imagery time series with fusion data using histogram matching; 3) model tree-crown scale phenology trajectory with a Beck logistic-based method; 4) detect a diversity of tree-crown scale phenological events using several phenological metric extraction methods (i.e., threshold- and curve feature-based methods).
Combined with weekly in-situ phenological observations of 123 individual trees across 12 broadleaf species from 2017 to 2020, the framework effectively bridges the satellite- and field-based phenological measures for the key spring phenological events (i.e., budswell, budburst, leaf expansion, and leaf maturity events) at the tree-crown scale, particularly for large individuals (RMSE <1 week for most events). Calibration of PlanetScope imagery using multi-scale satellite fusion data in consideration of landscape fragmentation is critical for monitoring tree phenology of forest fragments. Compared to curve feature-based methods, threshold-based phenometric extraction methods demonstrate enhanced capability in detecting spring leaf phenological dynamics of individual trees. Among the phenological events, full leaf out and early leaf expansion events are retrieved with high accuracy using calibrated PlanetScope time series (RMSE from 3 to 5 days and R-squared higher than 0.8). With both intensive satellite and field phenological efforts, this novel framework is at the forefront of interpreting tree-crown scale remotely sensed phenological metrics in the context of biologically meaningful field phenological events in fragmented forest setting.

@article{RN362, author = {Zhao, Yilun and Diao, Chunyuan and Augspurger, Carol K and Yang, Zijun}, title = {Monitoring spring leaf phenology of individual trees in a temperate forest fragment with multi-scale satellite time series}, journal = {Remote Sensing of Environment}, volume = {297}, pages = {113790}, issn = {0034-4257}, year = {2023}, }

- conference paper

2022

CyberGIS for Scalable Remote Sensing Data Fusion

Fangzheng Lyu, Zijun Yang, Zimo Xiao, Chunyuan Diao, Jinwoo Park, and Shaowen Wang

Practice and Experience in Advanced Research Computing, 2022

Satellite remote sensing data products are widely used in many applications and science domains ranging from agriculture and emergency management to Earth and environmental sciences. Researchers have developed sophisticated and computationally intensive models for processing and analyzing such data with varying spatiotemporal resolutions from multiple sources. However, the computational intensity and expertise in using advanced cyberinfrastructure have held back the scalability and reproducibility of such models. To tackle this challenge, this research employs the CyberGIS-Compute middleware to achieve scalable and reproducible remote sensing data fusion across multiple spatiotemporal resolutions by harnessing advanced cyberinfrastructure. CyberGIS-Compute is a cyberGIS middleware framework for conducting computationally intensive geospatial analytics with advanced cyberinfrastructure resources such as those provisioned by XSEDE. Our case study achieved remote sensing data fusion at high spatial and temporal resolutions based on integrating CyberGIS-Compute with a cutting-edge deep learning model. This integrated approach also demonstrates how to achieve computational reproducibility of scalable remote sensing data fusion.

@inproceedings{RN361, author = {Lyu, Fangzheng and Yang, Zijun and Xiao, Zimo and Diao, Chunyuan and Park, Jinwoo and Wang, Shaowen}, title = {CyberGIS for Scalable Remote Sensing Data Fusion}, booktitle = {Practice and Experience in Advanced Research Computing}, pages = {1-4}, year = {2022}, }

- journal article

2021

Hybrid phenology matching model for robust crop phenological retrieval

Chunyuan Diao, Zijun Yang, Feng Gao, Xiaoyang Zhang, and Zhengwei Yang

ISPRS Journal of Photogrammetry and Remote Sensing, 2021

Crop phenology regulates seasonal agroecosystem carbon, water, and energy exchanges, and is a key component in empirical and process-based crop models for simulating biogeochemical cycles of farmlands, assessing gross and net primary production, and forecasting the crop yield. The advances in phenology matching models provide a feasible means to monitor crop phenological progress using remote sensing observations, with a priori information of reference shapes and reference phenological transition dates. Yet the underlying geometrical scaling assumption of models, together with the challenge in defining phenological references, hinders the applicability of phenology matching in crop phenological studies. The objective of this study is to develop a novel hybrid phenology matching model to robustly retrieve a diverse spectrum of crop phenological stages using satellite time series. The devised hybrid model leverages the complementary strengths of phenometric extraction methods and phenology matching models. It relaxes the geometrical scaling assumption and can characterize key phenological stages of crop cycles, ranging from farming practice-relevant stages (e.g., planted and harvested) to crop development stages (e.g., emerged and mature). To systematically evaluate the influence of phenological references on phenology matching, four representative phenological reference scenarios under varying levels of phenological calibrations in terms of time and space are further designed with publicly accessible phenological information.
The results indicate that the hybrid phenology matching model can achieve high accuracies for estimating corn and soybean phenological growth stages in Illinois, particularly with the year- and region-adjusted phenological reference (R-squared higher than 0.9 and RMSE less than 5 days for most phenological stages). The inter-annual and regional phenological patterns characterized by the hybrid model correspond well with those in the crop progress reports (CPRs) from the USDA National Agricultural Statistics Service (NASS). Compared to the benchmark phenology matching model, the hybrid model is more robust to the decreasing levels of phenological reference calibrations, and is particularly advantageous in retrieving crop early phenological stages (e.g., planted and emerged stages) when the phenological reference information is limited. This innovative hybrid phenology matching model, together with CPR-enabled phenological reference calibrations, holds considerable promise in revealing spatio-temporal patterns of crop phenology over extended geographical regions.

@article{RN251, author = {Diao, Chunyuan and Yang, Zijun and Gao, Feng and Zhang, Xiaoyang and Yang, Zhengwei}, title = {Hybrid phenology matching model for robust crop phenological retrieval}, journal = {ISPRS Journal of Photogrammetry and Remote Sensing}, volume = {181}, pages = {308-326}, issn = {0924-2716}, year = {2021}, }

- journal article

2021

A Robust Hybrid Deep Learning Model for Spatiotemporal Image Fusion

Zijun Yang, Chunyuan Diao, and Bo Li

Remote Sensing, 2021

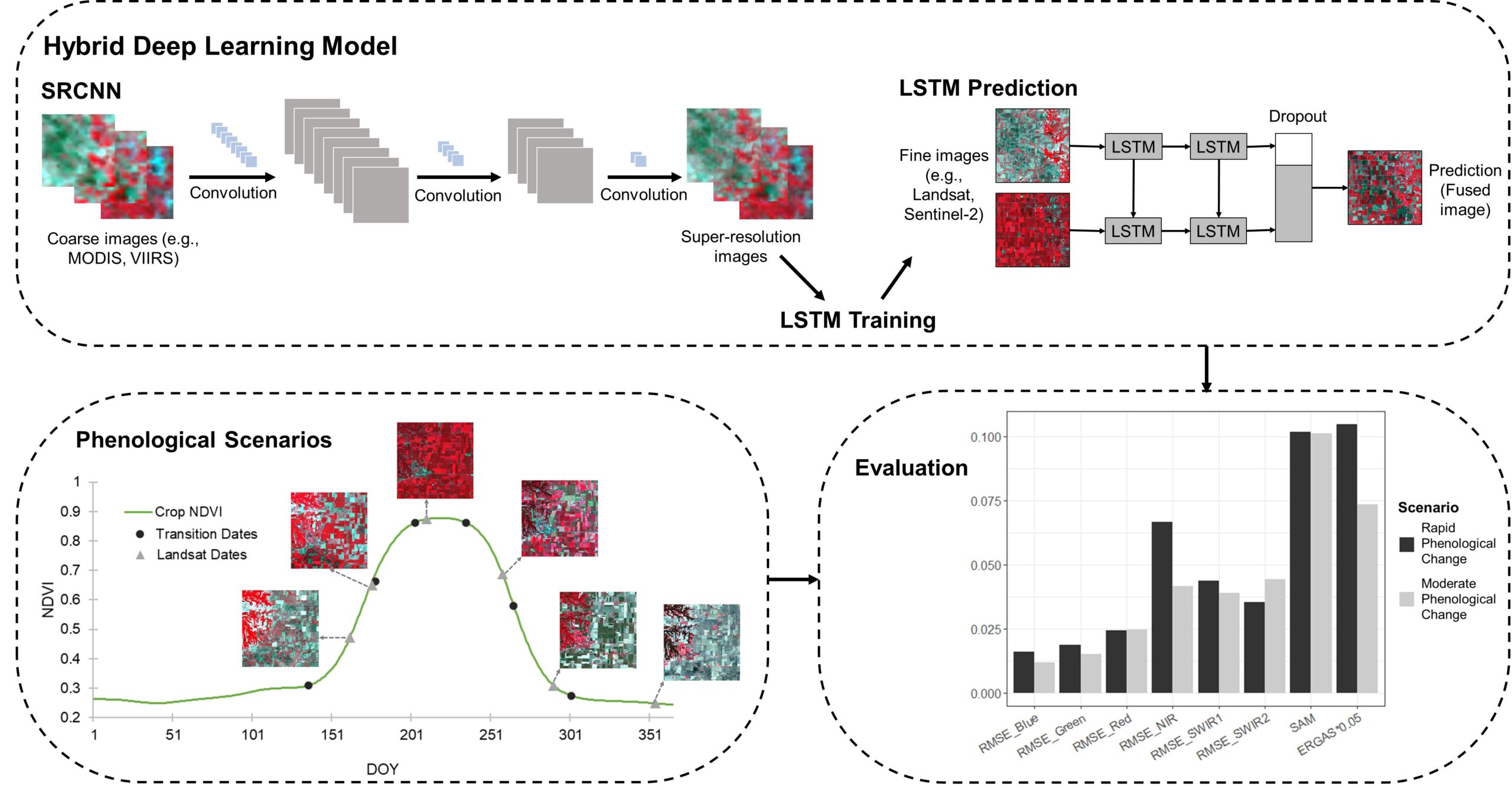

Dense time-series remote sensing data with detailed spatial information are highly desired for the monitoring of dynamic earth systems. Due to the sensor tradeoff, most remote sensing systems cannot provide images with both high spatial and temporal resolutions. Spatiotemporal image fusion models provide a feasible solution to generate such a type of satellite imagery, yet existing fusion methods are limited in predicting rapid and/or transient phenological changes. Additionally, a systematic approach to assessing and understanding how varying levels of temporal phenological changes affect fusion results is lacking in spatiotemporal fusion research. The objective of this study is to develop an innovative hybrid deep learning model that can effectively and robustly fuse the satellite imagery of various spatial and temporal resolutions. The proposed model integrates two types of network models: super-resolution convolutional neural network (SRCNN) and long short-term memory (LSTM). SRCNN can enhance the coarse images by restoring degraded spatial details, while LSTM can learn and extract the temporal changing patterns from the time-series images. To systematically assess the effects of varying levels of phenological changes, we identify image phenological transition dates and design three temporal phenological change scenarios representing rapid, moderate, and minimal phenological changes. The hybrid deep learning model, alongside three benchmark fusion models, is assessed in different scenarios of phenological changes. Results indicate the hybrid deep learning model yields significantly better results when rapid or moderate phenological changes are present. It holds great potential in generating high-quality time-series datasets of both high spatial and temporal resolutions, which can further benefit terrestrial system dynamic studies.
The innovative approach to understanding phenological changes’ effect will help us better comprehend the strengths and weaknesses of current and future fusion models.

@article{RN248, title = {A Robust Hybrid Deep Learning Model for Spatiotemporal Image Fusion}, author = {Yang, Zijun and Diao, Chunyuan and Li, Bo}, journal = {Remote Sensing}, volume = {13}, number = {24}, pages = {5005}, year = {2021}, }